Class 13: io_uring: I/O and Beyond

Date: 27.05.2025 Small Assignment

Intro

io_uring, the newest I/O interface in Linux (introduced in 2019), promised high-performance asynchronous input/output.

With each new Linux release, io_uring grows into something more: a whole new system call interface that circumvents most of the overhead of traditional system calls, and also the auditing tools that hook into them (which were taken by surprise).

Linux (and, in general, any mainstream OS) lacked a truly performant and widely useful asynchronous I/O interface: one in which you can ask for an operation (e.g., a write) to be performed without micromanaging, in userspace, a descriptor that might block or not be ready yet.

If one wanted to read data from a socket whenever it became available, the only options were to either spawn a thread and block in it, or poll for readiness and only then ask for a copy.
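For contrast, here is a minimal sketch of the readiness-based pattern just described, using poll(2) and read(2): one system call to learn that the descriptor is readable, and a second one to actually copy the data. The helper name read_when_ready is hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <poll.h>
#include <unistd.h>

/* Hypothetical helper: wait until fd is readable, then copy the data.
 * This costs two system calls per read: poll() for readiness, read() for the copy. */
ssize_t read_when_ready(int fd, void *buf, size_t len, int timeout_ms)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN };

    int ready = poll(&pfd, 1, timeout_ms);   /* syscall #1: is there anything? */
    if (ready < 0)
        return -errno;
    if (ready == 0)
        return -EAGAIN;                      /* timed out, nothing to read */

    ssize_t n = read(fd, buf, len);          /* syscall #2: the actual copy */
    return n < 0 ? -errno : n;
}
```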

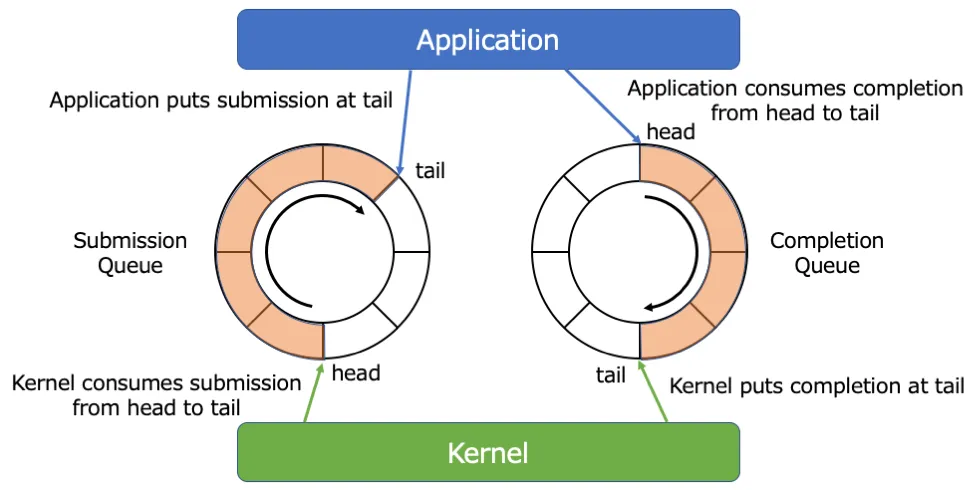

The core idea of io_uring is to replace the standard system call interface (i.e., a synchronous switch into the kernel) with "multiprocessing" single-producer single-consumer (spsc) queues (also called "channels"). Such queues are very easy to implement: two userspace processes need just a shared memory region and two atomic variables. Only the producer may modify (put) data in the queue and update its tail, while the consumer may only read the data and update the head. The fastest way to implement such a queue is a ring buffer, hence the name: Input/Output User(space) Ring-buffer. Since system calls must return success/error values, we need a second spsc queue to carry them back.
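A minimal sketch of such a queue in C11 atomics, shown within a single process for simplicity (in io_uring, the head/tail pairs and the entries live in memory shared with the kernel via mmap). The names spsc_ring, spsc_push, and spsc_pop are illustrative, not part of any io_uring API; like io_uring's rings, it uses a power-of-two size and free-running indices that are masked on access.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

#define RING_ENTRIES 8u                  /* must be a power of two */
#define RING_MASK (RING_ENTRIES - 1)

/* The producer only ever writes `tail`, the consumer only ever writes `head`.
 * Indices grow freely (wrapping at 2^32) and are masked when used. */
struct spsc_ring {
    _Atomic uint32_t head;               /* next slot to consume */
    _Atomic uint32_t tail;               /* next slot to fill */
    uint64_t slots[RING_ENTRIES];
};

bool spsc_push(struct spsc_ring *r, uint64_t v)
{
    uint32_t tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    uint32_t head = atomic_load_explicit(&r->head, memory_order_acquire);
    if (tail - head == RING_ENTRIES)
        return false;                    /* full */
    r->slots[tail & RING_MASK] = v;
    /* release: the slot write is visible before the new tail is */
    atomic_store_explicit(&r->tail, tail + 1, memory_order_release);
    return true;
}

bool spsc_pop(struct spsc_ring *r, uint64_t *out)
{
    uint32_t head = atomic_load_explicit(&r->head, memory_order_relaxed);
    uint32_t tail = atomic_load_explicit(&r->tail, memory_order_acquire);
    if (head == tail)
        return false;                    /* empty */
    *out = r->slots[head & RING_MASK];
    /* release: we are done reading the slot before handing it back */
    atomic_store_explicit(&r->head, head + 1, memory_order_release);
    return true;
}
```

The acquire/release pairs are what make two atomic variables sufficient: each side publishes its index only after it is done with the slot it guards.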

Of course, these two queues need not be tightly coupled: they are just a way to feed work into the kernel and get results out of it. Therefore, the queues actually represent a submission queue (sq) of tasks to be done and a completion queue (cq) with their results. A task, once popped from the submission queue by the kernel, may stay in flight for an arbitrary amount of time; once it completes, the kernel pushes its status into the completion queue. However, as the subsystem evolved, the 1-to-1 mapping between tasks and completions is gone: a task may result in zero or more completion events, and a "completion" event may even arrive without any corresponding task.
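Because tasks and completions are decoupled, the kernel gives us only one handle to match them up: the 64-bit user_data field, copied verbatim from the SQE into each CQE it produces. A minimal sketch using the uapi structures from <linux/io_uring.h>; prep_nop roughly mirrors what liburing's io_uring_prep_nop plus io_uring_sqe_set_data do, while struct request and on_completion are hypothetical bookkeeping.

```c
#include <linux/io_uring.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical per-task record kept by the application. */
struct request {
    const char *name;
    int done;
    int32_t res;
};

/* Fill a no-op SQE by hand and stash a pointer to our record in user_data. */
void prep_nop(struct io_uring_sqe *sqe, struct request *req)
{
    memset(sqe, 0, sizeof(*sqe));
    sqe->opcode = IORING_OP_NOP;
    sqe->fd = -1;
    sqe->user_data = (uint64_t)(uintptr_t)req;
}

/* Completions may arrive in any order, so a result finds its task again
 * only through user_data, never through its position in the CQ. */
struct request *on_completion(const struct io_uring_cqe *cqe)
{
    struct request *req = (struct request *)(uintptr_t)cqe->user_data;
    req->done = 1;
    req->res = cqe->res;
    return req;
}
```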

Source: https://medium.com/nttlabs/rust-async-with-io-uring-db3fa2642dd4

The interface

The io_uring subsystem requires just three "proper" system calls:

- io_uring_setup: prepares an io_uring instance and returns a file descriptor.
- io_uring_enter: notifies the kernel that the submission queue has new entries, and/or sleeps waiting for completions. The manpage also lists the supported operations (tasks).
- io_uring_register: multiplexes auxiliary operations (e.g., registering files or buffers).
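glibc provides no wrappers for these system calls, so without liburing a ring is created with a raw syscall(2). A minimal sketch, assuming kernel headers that define __NR_io_uring_setup; the mmap step from the io_uring(7) example is left out, and ring_setup and sq_ring_bytes are hypothetical helper names.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <linux/io_uring.h>
#include <stddef.h>
#include <string.h>
#include <sys/syscall.h>
#include <unistd.h>

/* Hypothetical helper: create a ring with io_uring_setup(2).
 * Returns the ring fd, or -errno (e.g. -ENOSYS on kernels without io_uring,
 * -EPERM when it is disabled, restricted, or blocked by a seccomp filter). */
int ring_setup(unsigned entries, struct io_uring_params *p)
{
    memset(p, 0, sizeof(*p));            /* no setup flags: a plain ring */
    long fd = syscall(__NR_io_uring_setup, entries, p);
    return fd < 0 ? -errno : (int)fd;
}

/* Length of the SQ ring mapping (mmap offset IORING_OFF_SQ_RING), computed
 * as in the io_uring(7) example: the index array is its last field. */
size_t sq_ring_bytes(const struct io_uring_params *p)
{
    return p->sq_off.array + p->sq_entries * sizeof(__u32);
}
```

On success the kernel fills in the params: entries are rounded up to a power of two, and by default the CQ ring gets twice as many entries as the SQ ring.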

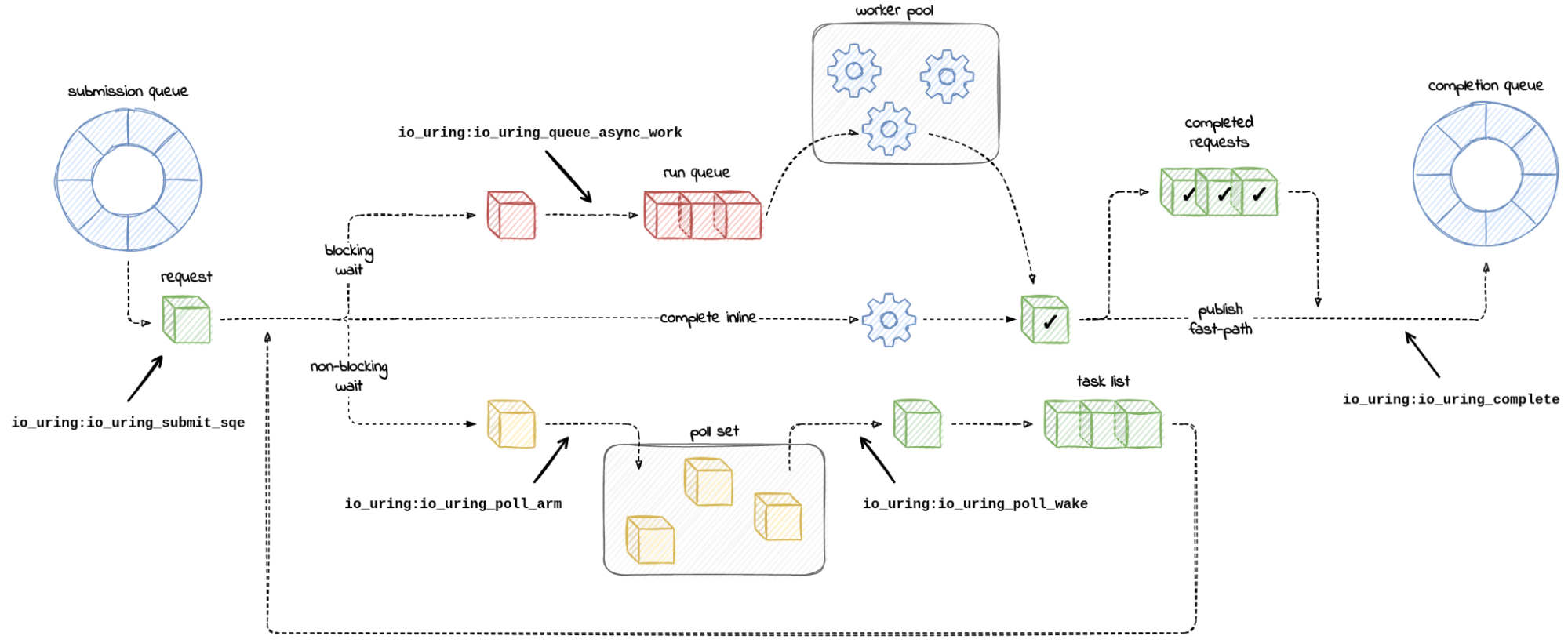

Instead of duplicating well-written documentation here, let's head together to the io_uring(7) manpage. The structures may be also found in the uapi headers for io_uring. The picture below represents what's happening on the kernel side.

Source: https://blog.cloudflare.com/missing-manuals-io_uring-worker-pool/¶

Hands-on

This interface lets the user ask the kernel to do some work. How could we use (hack) these facilities to work the other way around, letting the kernel ask userspace for work?

This is very practical, as it allows implementing, efficiently and in userspace, things that traditionally had to live in the kernel. FUSE (filesystem in userspace) is one example, but until recently there was no practical way to implement block devices in userspace. Now, there is ublk.

Liburing

As seen in the example, there is a lot of boilerplate code, and therefore there is a companion userspace library called liburing. The main documentation of the "raw" interface is also located there.

Hands-on

Before we proceed, make sure you have a working setup.

First, compile and run the raw-interface example found in the io_uring manpage. Then, compile and run an example from liburing.

You may need to install the liburing headers:

sudo apt install liburing-dev

Or, even better, build the newest version from sources.

It is probably wisest to use our system image.

Due to stability (and security) considerations, io_uring is often disabled or restricted to the superuser (check /proc/sys/kernel/io_uring_disabled).

Moreover, some systems may simply run a kernel too old for the next tasks.
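The procfs knob can also be checked from code. A minimal sketch with a hypothetical function name; it treats a missing file as "no such knob", since the sysctl only exists on newer kernels (from around Linux 6.6).

```c
#include <stdio.h>

/* Returns the io_uring_disabled sysctl value:
 *   0 = io_uring available to everyone,
 *   1 = only privileged processes or members of kernel.io_uring_group,
 *   2 = io_uring_setup disabled for everyone,
 * or -1 if the file is missing or unreadable. */
int io_uring_disabled_level(void)
{
    FILE *f = fopen("/proc/sys/kernel/io_uring_disabled", "r");
    if (!f)
        return -1;
    int level = -1;
    if (fscanf(f, "%d", &level) != 1)
        level = -1;
    fclose(f);
    return level;
}
```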

Possibilities

Operations

You can see a list of supported operations in the io_uring_enter(2) manpage.

Most of them are versions of standard I/O syscalls like write, preadv2, or recvmsg, but we can also submit a no-op (IORING_OP_NOP), a timeout (IORING_OP_TIMEOUT), or a message to another io_uring (IORING_OP_MSG_RING). Operations may act as barriers in the task stream (the IOSQE_IO_DRAIN flag), form chains of dependencies (IOSQE_IO_LINK), and much more.
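In raw terms, a link chain is nothing more than a flag on each SQE: every entry except the last carries IOSQE_IO_LINK, and the chain ends at the first entry without it. A minimal sketch over the uapi struct io_uring_sqe; link_chain is a hypothetical helper (with liburing one would set the same flag via io_uring_sqe_set_flags or sqe->flags directly).

```c
#include <linux/io_uring.h>
#include <stddef.h>

/* Turn n consecutive SQEs into one linked chain. Each linked request starts
 * only after the previous one completed; if one fails, the remaining
 * requests of the chain are cancelled with -ECANCELED. */
void link_chain(struct io_uring_sqe *sqes, size_t n)
{
    for (size_t i = 0; i + 1 < n; i++)
        sqes[i].flags |= IOSQE_IO_LINK;
    if (n > 0)
        sqes[n - 1].flags &= (__u8)~IOSQE_IO_LINK;   /* the last one ends it */
}
```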

See the link-cp example for how an efficient copy may be implemented.

Hands-on

To explore the capabilities, modify the example liburing program to print "Hello World" after waiting for 3 seconds. Do this by preparing all io_uring tasks upfront.

Hint: consider man 3 io_uring_prep_link_timeout (or just io_uring_prep_timeout), io_uring_prep_write, the IORING_TIMEOUT_ETIME_SUCCESS flag, and setting sqe->flags to IOSQE_IO_DRAIN and/or IOSQE_IO_LINK.

Registered files and buffers

The io_uring subsystem has two more interesting features worth mentioning here.

The first is a registry of file descriptors.

When we schedule a request for an I/O operation, the kernel has to map the provided file descriptor to whatever it actually represents (a file, a device, a socket, an io_uring, ...), which may be costly and require synchronisation.

We can ask the subsystem to perform this mapping only once with io_uring_register(ring_fd, IORING_REGISTER_FILES, fd_array, fd_array_len).

Registered file descriptors can then be referenced by their index in the array instead of by the fd, when we set sqe->flags |= IOSQE_FIXED_FILE. You may even close the original descriptor.

This operation effectively creates a new identifier space. As the next step, io_uring implemented operations that work solely in this space: we can open a file, write, fsync, and close it in a single linked sequence without ever creating a file descriptor!
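A sketch of both halves with the raw interface: registering the array through io_uring_register(2), and then preparing a write whose sqe->fd holds an index into that array. The helper names register_files and prep_write_fixed are hypothetical; only the syscall number and uapi constants come from the kernel headers.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <linux/io_uring.h>
#include <stdint.h>
#include <string.h>
#include <sys/syscall.h>
#include <unistd.h>

/* Register an array of descriptors with the ring. Returns 0 or -errno. */
int register_files(int ring_fd, const int *fds, unsigned n)
{
    long ret = syscall(__NR_io_uring_register, ring_fd,
                       IORING_REGISTER_FILES, fds, n);
    return ret < 0 ? -errno : (int)ret;
}

/* Prepare a write that names the file by its index in the registered array.
 * IOSQE_FIXED_FILE tells the kernel to interpret sqe->fd as that index. */
void prep_write_fixed(struct io_uring_sqe *sqe, unsigned file_index,
                      const void *buf, unsigned len)
{
    memset(sqe, 0, sizeof(*sqe));
    sqe->opcode = IORING_OP_WRITE;
    sqe->fd = (int32_t)file_index;       /* an index, not a descriptor */
    sqe->flags = IOSQE_FIXED_FILE;
    sqe->addr = (uint64_t)(uintptr_t)buf;
    sqe->len = len;
    sqe->off = (uint64_t)-1;             /* -1: use the current file position */
}
```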

The second feature to note is buffer registration.

This started, similarly to file descriptors, as just an optimisation to avoid mapping user buffers in the kernel over and over again.

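This evolved into registering a set of buffers and letting the kernel select any of them for "read" operations (the IOSQE_BUFFER_SELECT flag)!

This allows us to conserve a lot of memory while waiting on thousands of connections: a buffer is taken from the shared pool only when data actually arrives, instead of being reserved up front for every pending read.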

Read man 3 io_uring_register_buf_ring for details of the currently most efficient method.
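On the SQE/CQE level, buffer selection is small: the request names a buffer group instead of a buffer, and the CQE reports which buffer the kernel picked. A minimal sketch with hypothetical helper names; providing the buffers themselves (e.g., via io_uring_register_buf_ring) is not shown.

```c
#include <linux/io_uring.h>
#include <stdint.h>
#include <string.h>

/* Prepare a read that supplies no buffer: with IOSQE_BUFFER_SELECT the
 * kernel takes one from buffer group `bgid` when data is actually ready. */
void prep_read_select(struct io_uring_sqe *sqe, int fd, unsigned max_len,
                      uint16_t bgid)
{
    memset(sqe, 0, sizeof(*sqe));
    sqe->opcode = IORING_OP_READ;
    sqe->fd = fd;
    sqe->addr = 0;                       /* no buffer of our own */
    sqe->len = max_len;                  /* at most this many bytes */
    sqe->flags = IOSQE_BUFFER_SELECT;
    sqe->buf_group = bgid;
    sqe->off = (uint64_t)-1;
}

/* The chosen buffer ID sits in the upper bits of cqe->flags, valid only
 * when IORING_CQE_F_BUFFER is set. Returns -1 if no buffer was consumed. */
int selected_buffer_id(const struct io_uring_cqe *cqe)
{
    if (!(cqe->flags & IORING_CQE_F_BUFFER))
        return -1;
    return (int)(cqe->flags >> IORING_CQE_BUFFER_SHIFT);
}
```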

IORING_OP_URING_CMD

The IORING_OP_URING_CMD issues an ioctl-like operation to the given file descriptor.

file_operations->unlocked_ioctl cannot simply be used directly, as it is an inherently blocking operation and would therefore always require a separate kernel thread.

Instead, a new file operation was introduced:

int (*uring_cmd)(struct io_uring_cmd *ioucmd, unsigned int issue_flags);

At the time of writing, only a handful of implementors are present in mainline, most notably sockets and ublk.

Multishot operations

Typically, one task creates exactly one Completion Queue Entry (cqe). We can get zero by asking the kernel to skip success notifications with the IOSQE_CQE_SKIP_SUCCESS flag.

More importantly, we can ask a task to stay active after it has handled an event. System calls like poll and accept are traditionally run in a loop; with io_uring, we can turn them into multishot versions that produce multiple CQEs. Each such entry, except the final one, is marked with the IORING_CQE_F_MORE flag (in cqe->flags).
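A consumer of multishot completions therefore has to check IORING_CQE_F_MORE on every CQE: while it is set, the request stays armed; once a CQE arrives without it, the kernel has dropped the request and it must be resubmitted to keep receiving events. A minimal sketch for a multishot accept, where a non-negative cqe->res is the new connection's descriptor; struct accept_state and on_accept_cqe are hypothetical.

```c
#include <linux/io_uring.h>
#include <stdbool.h>

/* Hypothetical application state for one multishot accept request. */
struct accept_state {
    int accepted;     /* connections seen so far */
    bool armed;       /* is the multishot request still active in the kernel? */
};

void on_accept_cqe(struct accept_state *st, const struct io_uring_cqe *cqe)
{
    if (cqe->res >= 0)
        st->accepted++;                  /* res holds the accepted descriptor */
    /* Without IORING_CQE_F_MORE this is the last CQE of the request
     * (e.g. after an error), so the caller has to re-arm it. */
    st->armed = (cqe->flags & IORING_CQE_F_MORE) != 0;
}
```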

Small Assignment

Write a simple proof-of-concept echo server implemented as a single chain of linked operations (and therefore with a single io_uring_submit call too).

The server should:

- prepare and listen on a socket,
- accept a connection,
- read data (once, possibly a fixed amount),
- write that buffer back,
- and finally close the connection.

Everything should be connected with IOSQE_IO_LINK.

Hints:

- begin with a traditional socket setup, up to io_uring_prep_accept_direct,
- IORING_OP_BIND landed in 6.11 and needs a newer liburing than the one in the Debian packages,
- you can test your solution with something like:

while :; do nc localhost 13131 <<<"Hello, echo?" && echo "closed"; sleep 1; done

Extra challenge!

If you have enjoyed this task and attended the Distributed Systems course, here is a challenge for you:

Can you implement the Stable Storage primitive (atomic file update safe against crashes) as a single io_uring linked chain?

Remember about AF_ALG socket family for hashing :).

References

man 7 io_uring, man 2 io_uring_enter for a list of supported ops

io_uring subsystem on LXR for 6.12.6, uapi headers for io_uring

there is no authoritative listing of liburing functions, but you may look at what links to man 3 io_uring_get_sqe

Efficient IO with io_uring – a longer introductory article, Jens Axboe, 2019

For updates, take a look at:

LWN articles about io_uring – however, many are about patches that did not make it into mainline

Nick Black's wiki article – a concise, up-to-date summary of io_uring features

liburing GitHub wiki – 2023, up to 6.12

Missing Manuals - io_uring worker pool, Jakub Sitnicki, 2022

liburing examples, especially

io_uring-test.c, io_uring-cp.c, link-cp.c